At DEF CON this year, I had the opportunity to participate in a capture the flag (CTF) competition that focused on industrial control systems (ICS). For those who don’t know, DEF CON is one of the most widely attended security/hacker conferences in the world, hosted annually in Las Vegas. Security CTFs are competitions that involve using a wide variety of offensive and forensic security techniques to solve challenges and puzzles. They are usually entered as individuals or teams, generally for bragging rights or prizes. I worked on this CTF on a team with some old friends. We hadn’t really planned on participating, so we got a late start, but we had a lot of fun. In this blog post, I’m going to walk you through a couple of the scenarios from the CTF and some of their real-world implications.

As I wrote in a previous blog post for DomainTools, ICS can be broadly defined as any system that uses computer software to control physical systems, such as industrial machinery. ICS are often designed using embedded processors that are real-time systems, unlike traditional multiprocessing systems. Real-time systems are used where timing is critical to the application. Typical computing platforms allow the operating system kernel to swap out or delay applications based on demand, usage, security, or other concerns, which a real-time system cannot tolerate. Many traditional security and information assurance tools, such as antivirus or encryption, introduce unacceptable delays for real-time, embedded systems. These systems place the physical safety of the machinery and its operators at a higher priority than information security needs.

The CTF that my friends (Bridget and Kev, both Incident Response folks at Facebook) and I participated in was the Red Alert ICS CTF. Scenarios in this competition included: interfering with airport control, disrupting electricity generation and distribution, interfering with railroad control, altering chemical plant PLCs and HMIs, and altering water treatment plant PLCs and HMIs. Within those scenarios, there were challenges that included analyzing and exploiting protocols like Modbus, distributed network protocol v3 (DNP3), Bluetooth, and Zigbee. There were also specific challenges for exploiting airgapped machines, exploiting real human machine interfaces (HMIs) and programmable logic controllers (PLCs), and doing social engineering.

On a table at the front of the room, the CTF organizers had a miniature city, an airplane that appeared to be in flight, several small PLCs, at least two real PLCs, and several Bluetooth devices (including a Google Pixel and a Google Nexus 5X).

CTFs have flags scattered throughout the challenges in the competition. In this particular CTF, the flag format looked like RACTF{some-value-here}.

Modbus Challenge

ICS protocols like Modbus and DNP3 offer very little in terms of security, authentication, encryption, and other protection measures. This makes them highly vulnerable to attack when attached to public networks. However, these types of protocols are also fairly obscure and domain-specific.

From www.modbus.org:

Modbus is used in multiple master-slave applications to monitor and program devices; to communicate between intelligent devices and sensors and instruments; to monitor field devices using PCs and HMIs. Modbus is also an ideal protocol for RTU applications where wireless communication is required. For this reason, it is used in innumerable gas and oil and substation applications. But Modbus is not only an industrial protocol. Building, infrastructure, transportation and energy applications also make use of its benefits.

Modbus is an open protocol, meaning that it’s free for manufacturers to build into their equipment without having to license or pay royalties. It is typically used to transmit signals from instrumentation and control devices back to a main controller or data gathering system. As a protocol, Modbus identifies devices only by a unit ID. Each unit has a fairly limited number of inputs, outputs, and storage types, described in terms of discrete inputs, coils (outputs), input registers, and holding registers. These different data types typically represent analog values present in the real world, either as sensor readouts or actuator values, and can either be 1 bit (a 0 or 1) or 16 bits (enough for about two letters). If you were attacking a real Modbus device, you likely would not know what writing to or reading from a particular coil or register meant unless you were a system operator or had specific knowledge about the implementation of the system the PLC is measuring or controlling.
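To make that data model a little more concrete, here is a minimal sketch of what a Modbus/TCP read request looks like on the wire. The function name and its defaults are made up for illustration and are not taken from any particular library. Note that nothing in the frame carries a credential: the unit ID is the only thing that identifies the target.

```python
import struct

# Function codes for reading each of the four Modbus data types.
READ_COILS = 0x01              # 1-bit outputs
READ_DISCRETE_INPUTS = 0x02    # 1-bit inputs
READ_HOLDING_REGISTERS = 0x03  # 16-bit read/write registers
READ_INPUT_REGISTERS = 0x04    # 16-bit read-only registers


def build_read_request(unit_id, function_code, start_address, quantity,
                       transaction_id=1):
    """Build a Modbus/TCP read request (MBAP header followed by the PDU)."""
    # PDU: function code, starting address, number of items to read.
    pdu = struct.pack(">BHH", function_code, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0 for Modbus),
    # length of everything after the length field, then the unit id.
    header = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return header + pdu


# Ask unit 1 for its first ten holding registers.
frame = build_read_request(unit_id=1,
                           function_code=READ_HOLDING_REGISTERS,
                           start_address=0,
                           quantity=10)
print(frame.hex())
```

The whole request is twelve bytes, and swapping the function code is all it takes to target a different data type.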

Based on this background knowledge, when we found a Modbus service, we expected to read or write to the Modbus service and have a flag show up somewhere else (since we know Modbus has this pattern where it’s representing analog values in the real world). We were given instructions not to try to write new values to the PLC, so we started by reading the holding registers. What we found there was mostly binary data, but we did notice a pattern that we recognized:

If you don’t know anything about binary files, you might not guess that the “PNG” in the above blob means this binary data is a Portable Network Graphics (PNG) image. If we open this in an image editor, we see:

In order to extract this, we wrote a simple Python program to read from Modbus and gradually worked out how long the file was (based on error conditions).
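The script itself isn’t reproduced here, but the approach can be sketched roughly as follows. The `read_registers` callable is a hypothetical stand-in for whatever Modbus client call you use: it takes a start address and a count, returns a list of 16-bit integers, and raises an exception on an invalid address. The high-byte-first order within each register is also an assumption, and `fake_device` exists only so the sketch runs without a PLC.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def registers_to_bytes(registers):
    """Pack 16-bit register values into bytes, high byte first (assumed)."""
    return b"".join(struct.pack(">H", value) for value in registers)


def dump_registers(read_registers, start=0, chunk=100):
    """Read registers chunk by chunk until the device reports an error.

    When a read fails, halve the chunk size and retry, so the final
    partial run of registers is still recovered.
    """
    data = bytearray()
    address = start
    while chunk > 0 and address < 65536:
        try:
            values = read_registers(address, chunk)
        except Exception:
            chunk //= 2  # overshot the end: retry with a smaller request
            continue
        data += registers_to_bytes(values)
        address += len(values)
    return bytes(data)


def fake_device(payload):
    """Hypothetical in-memory stand-in for a PLC holding `payload`."""
    padded = payload + b"\x00" * (len(payload) % 2)
    registers = [value for (value,) in struct.iter_unpack(">H", padded)]

    def read_registers(address, count):
        if address + count > len(registers):
            raise ValueError("illegal data address")
        return registers[address:address + count]

    return read_registers


sample = PNG_SIGNATURE + b"rest of the image data"
dumped = dump_registers(fake_device(sample))
print(dumped.startswith(PNG_SIGNATURE))
```

Shrinking the request on each error is one way to find where the data ends without knowing the length up front.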

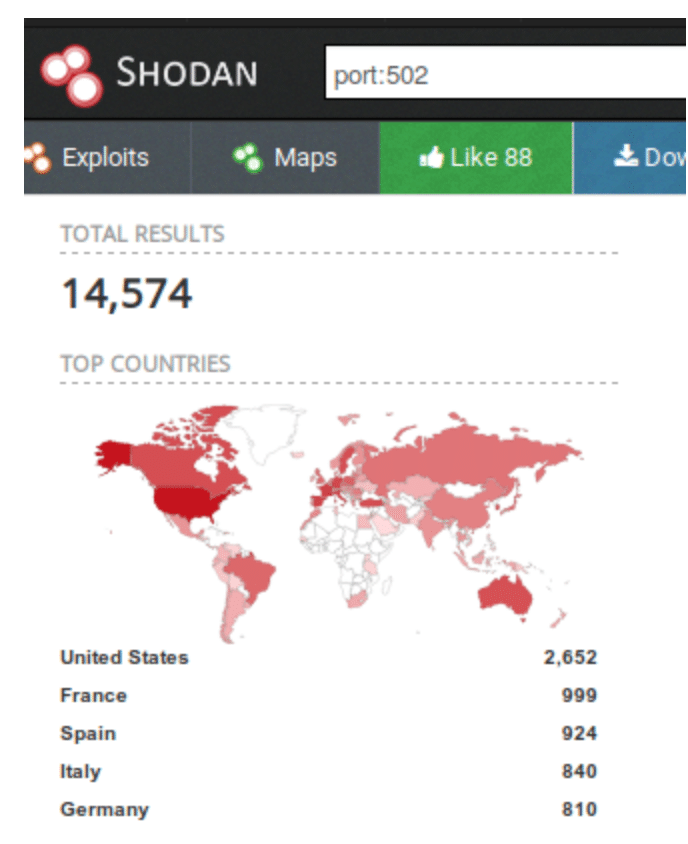

Modbus devices, when connected directly to the Internet or to another unsecured network, do pose a security risk. These devices on their own have virtually no security features and often control or reflect processes that are happening in the physical world, so changing values on them could theoretically have serious consequences. A quick search of the device search engine Shodan shows over 14,000 of these devices that can be directly accessed from the public Internet.

However, it’s important to put these risks in context. Though Modbus devices have no inherent security, they do gain some security by obscurity. In principle, this is not the type of security we want to rely on, but it also means the sky is not falling. PLCs that control industrial applications may not have many information security safeguards, but those industrial applications are often required by law to have numerous safety fallbacks. Thus, while it may be easy to create a certain amount of havoc and inconvenience by writing to these devices remotely, it is unlikely (though not impossible) that an attack on one would cause a catastrophic failure of an industrial process. The safety and redundancy in place behind these devices (in physical plant operations, for example) is the primary line of defense.

With that in mind, organizations that manage these devices should follow best practices, including network segmentation, to remove direct access to these machines. In addition, monitoring communications to and from these devices for anomalous communication can move the needle in terms of detecting compromises or potential compromises to industrial control networks.
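As one illustration of what such monitoring might look for, the sketch below parses the header of a captured Modbus/TCP request and flags the common function codes that write to a device. It assumes you already have the raw TCP payloads from a tap or capture. The function name is made up for this example, and a real deployment would more likely lean on an existing intrusion detection system.

```python
import struct

# Common Modbus function codes that modify device state.
WRITE_FUNCTION_CODES = {
    0x05: "write single coil",
    0x06: "write single register",
    0x0F: "write multiple coils",
    0x10: "write multiple registers",
}


def inspect_request(payload):
    """Return an alert string if a Modbus/TCP request writes, else None."""
    if len(payload) < 8:
        return None  # too short for an MBAP header plus a function code
    _transaction_id, protocol_id, _length, unit_id, function_code = \
        struct.unpack(">HHHBB", payload[:8])
    if protocol_id != 0:
        return None  # the protocol id is always 0 for Modbus
    action = WRITE_FUNCTION_CODES.get(function_code)
    if action is None:
        return None
    return f"unit {unit_id}: {action} (function 0x{function_code:02x})"


read_request = bytes.fromhex("00010000000601030000000a")
write_request = bytes.fromhex("0001000000060106000100ff")
for request in (read_request, write_request):
    print(inspect_request(request))
```

On a network where operators only ever poll values, any write coming from an unexpected host is worth a closer look.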

Additional information on securing Modbus and other ICS devices: https://ics-cert.us-cert.gov/Recommended-Practices

Power Grid Challenge

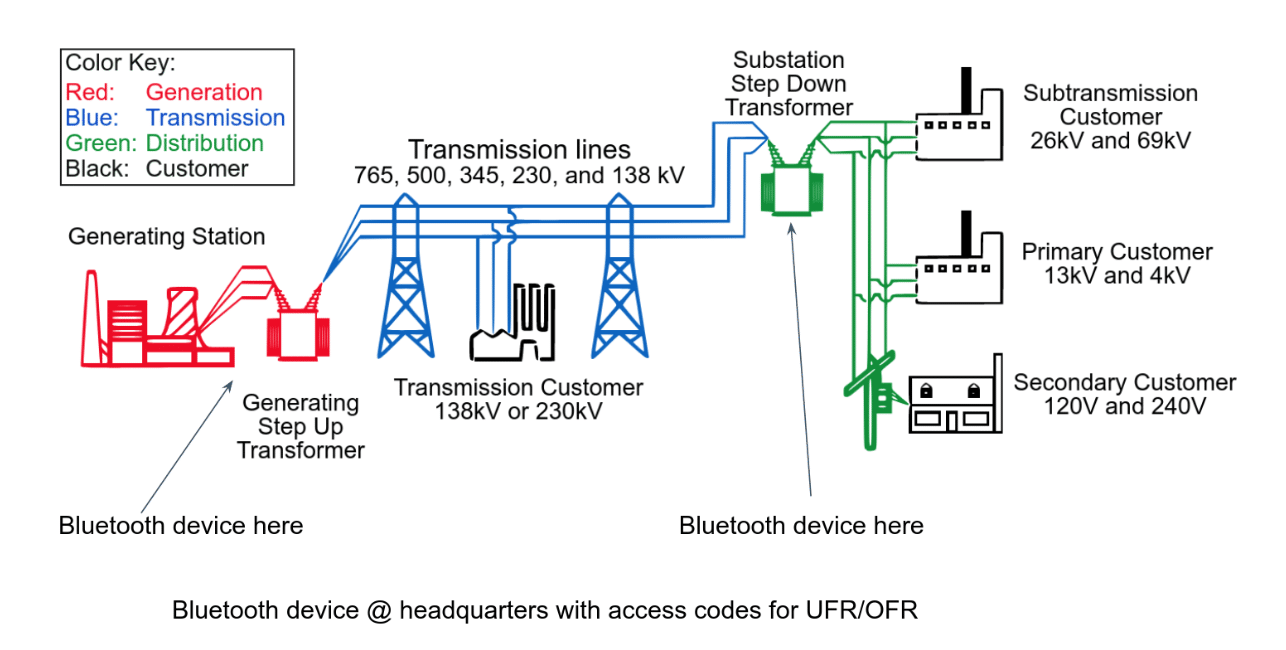

The premise of the power grid scenario had some built-in assumptions. We deduced that a Bluetooth Low Energy (BLE) device that stored credentials and access codes existed at some headquarters; this device was also in charge of sending automated SMS messages when grid parameters required intervention. The scenario also assumed that key personnel at generation and distribution stations used Android phones to get status messages.

We deduced all this from clues and from the packet capture files we were given, which are raw dumps of conversations between (in this case) Bluetooth devices. We were told that a malicious actor had been able to capture and leak these Bluetooth sessions between operator phones and the BLE device at HQ.
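In practice you would open captures like these in a tool such as Wireshark, but the file format itself is simple. The sketch below walks the records of a capture, assuming the classic libpcap layout with microsecond timestamps (the exact format of the files we were given is an assumption here). The function name and the tiny hand-built capture are purely illustrative.

```python
import struct

PCAP_MAGIC = 0xA1B2C3D4


def iter_pcap_packets(data):
    """Yield (timestamp_seconds, packet_bytes) from a classic pcap file."""
    if len(data) < 24:
        raise ValueError("too short for a pcap global header")
    # The magic number reveals which byte order the writer used.
    if struct.unpack("<I", data[:4])[0] == PCAP_MAGIC:
        endian = "<"
    elif struct.unpack(">I", data[:4])[0] == PCAP_MAGIC:
        endian = ">"
    else:
        raise ValueError("not a classic pcap file")
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        # Record header: seconds, microseconds, captured length, original length.
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + 16])
        offset += 16
        yield ts_sec + ts_usec / 1_000_000, data[offset:offset + incl_len]
        offset += incl_len


# Build a one-packet capture by hand so the sketch runs without a real file.
# Fields: magic, version 2.4, timezone, sigfigs, snaplen, link type
# (the link-type value here is illustrative).
global_header = struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, 65535, 201)
record = struct.pack("<IIII", 1, 500000, 3, 3) + b"\x01\x02\x03"
packets = list(iter_pcap_packets(global_header + record))
print(len(packets), packets[0][1].hex())
```

Each yielded packet is the raw frame as captured, which is where device addresses like the ones we used later can be found.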

Though Bluetooth is not generally depended on in core electricity generation and distribution infrastructure, a little research shows that this scenario might not be too far-fetched:

http://eecatalog.com/wireless/2017/09/07/bluetooth-5-expands-into-the-smart-grid/

We made a mental model of what this might look like based on what we were told and deduced about the scenario, which looks something like the below:

In the scenario, the headquarters device held over frequency response (OFR) and under frequency response (UFR) codes. The details of OFR/UFR are a little over my head, but essentially these are codes that signal that the grid frequency is running too high (OFR) or too low (UFR). Receipt of one of these codes would trigger an operator to compensate.

The goal of the scenario was to send the OFR code to one operator and the UFR code to the other operator, causing them to compensate against each other and shut down both power generation and distribution.

For a more detailed discussion of OFR/UFR in the prevention of islanding, see:

To access the headquarters Bluetooth device, we found its Bluetooth MAC address in the pcap file, used sdptool to do service discovery and contact the device over serial, and extracted the UFR/OFR codes from the serial output.

In the scenario, it was leaked that the right code sent to an operator phone would trigger action. However, we didn’t know any of the operators’ phone numbers. BUT we did know where they were (physically, they were on the table) and we knew their phones’ MAC addresses (from the packet capture I mentioned earlier).

To try to get these phone numbers, we first tried the old, old, old utility “bluesnarfer” to pull phone books, which did not work. Then we hunted down a fairly new(ish) vulnerability from September 2017 called “BlueBorne.”

We used Android proof-of-concept exploit code to get a remote command prompt on the two Android devices. The vulnerability we used is described on the discloser’s site:

This vulnerability resides in the Bluetooth Network Encapsulation Protocol (BNEP) service, which enables internet sharing over a Bluetooth connection (tethering). Due to a flaw in the BNEP service, a hacker can trigger a surgical memory corruption, which is easy to exploit and enables him to run code on the device, effectively granting him complete control. Due to lack of proper authorization validations, triggering this vulnerability does not require any user interaction, authentication or pairing, so the targeted user is completely unaware of an ongoing attack.

Using this code, we were able to get a remote command prompt and download the phone books from these devices. We then had the numbers for devices on the generation and distribution sides to send the OFR/UFR codes to. An example of how we did this can be seen in the Armis demo video. When we sent the OFR/UFR codes to the two different devices, it was explained to us that it was equivalent to shutting the power down for the town (and we set off a siren). The CTF organizers were quite excited and got a picture of me and one of my teammates in hardhats:

There has been a wide variety of somewhat speculative reporting on compromises to the United States power grid in recent years. Opinions generally range from “all of our devices are already compromised” to “we’re safe.” In my previous life as a Cybersecurity Analyst working for a Department of Energy National Laboratory, I had the privilege of speaking with a number of folks who work in the energy delivery and distribution world. The overwhelming sense I got from those conversations is that we don’t know enough about the baseline of Internet-connected power systems to be able to do effective anomaly detection. However, our electric grid was built and operates as a distributed system. Security controls and implementations at different points in the grid may be quite different, as may usage and implementation of ICS. This means it’s highly unlikely that an exploit that works on one part of the grid will be transferable to others. Additionally, the grid needs to compensate for fluctuations in demand and generation, which means that different parts of the grid are already, in a sense, prepared to deal with anomalies in the parameters of their neighboring counterparts.

Additional information: https://www.npr.org/2018/03/16/596057713/infiltrating-power-grid-would-be-difficult-heres-why

https://www.darkreading.com/endpoint/power-grid-security-how-safe-are-we/a/d-id/1332420

http://fortune.com/2017/09/11/dragonfly-2-0-symantec-hackers-power-grid/

This isn’t to say we shouldn’t take vulnerabilities in, or threats to, the power grid seriously, but fear, uncertainty, and doubt (FUD) are often the enemy of good, rational, measured security policy. There are things we can do about industrial control systems security, and more that we can do to secure a resilient future for our power grid. In many cases, what operators are already doing (relying on their strengths in improving the safety of failure modes of the systems connected to these ICS devices) is a good start. FUD tends to lead to inaction, as we come to believe our situation is unfixable. Instead, we need to take incremental steps toward creating a more secure landscape for power generation and distribution.

Conclusions

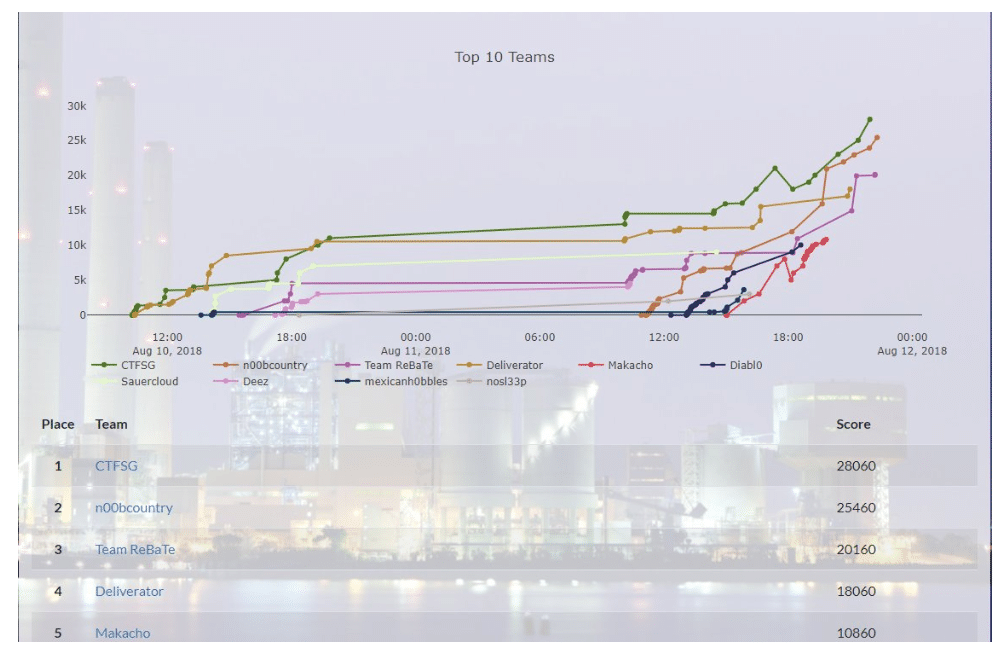

We started the competition late: it started on Friday at 10am and we began participating at 11am on Saturday. By the end of the day Saturday, we were in first place. In the last two hours of the competition Sunday morning, one of the other teams pulled ahead of us. Still, for starting late, we did pretty well. Out of 30+ teams, our team, “n00bcountry,” spiked fast.

Industrial control systems like the ones in this competition control much of the machinery and industrial processes in our modern, connected world. As security practitioners, it’s important to understand and interact with these types of systems, as they often have different capabilities and characteristics than our typical information technology systems. It is also important to keep in mind the true vulnerabilities and difficulties in exploiting these types of systems. Many of these systems are quite vulnerable, but it is not always trivial to exploit them in practice, as their configurations and interactions are often quite opaque, and the physical safeguards put in place make many of the logical exploits less important.